Vision Quest

This Week in Tech 64

NVIDIA's founder reveals latest AI plans, Google makes Gemini more useful and Disney's robots are really coming to life

Welcome to the cutting edge ⚔️

Read time: 8 min

Today’s Slate

NVIDIA GTC 2025 - the latest and greatest from the legend Jensen Huang

Check out Google’s latest image generation tool that could crush Photoshop

Stability’s new model can turn an image into a 3D scene

Google’s Gemini is shifting toward becoming an AI-powered workspace

Meta’s Quest system continues to evolve and provide more tools for developers

RoboCop: Streets of Anarchy is the latest in a line of franchise-driven VR experiences

NVIDIA and Google DeepMind team up with Disney Research to power Disney’s robots

The. Future. Is. Here.

Artificial Intelligence

At a glance

New AI supercomputers: NVIDIA introduced DGX Spark, which it bills as the world’s smallest AI supercomputer, and DGX Station, a high-performance desktop AI workstation.

Next-gen GPUs: Blackwell Ultra GPUs will launch in late 2025, followed by Vera Rubin GPUs in 2026, each promising major performance gains over its predecessor.

Quantum computing focus: NVIDIA set aside March 20 at GTC as "Quantum Day," highlighting its growing interest in quantum computing.

Robotics & AI models: NVIDIA announced GR00T N1, a foundation model for robots, and Newton, a robotics platform developed with Disney and Google DeepMind.

Stock & market impact: NVIDIA’s stock faces pressure amid competition from DeepSeek’s AI models, making this GTC a crucial moment for the company.

Our vision

NVIDIA is doubling down on AI computing across robotics, quantum computing, and GPUs, positioning itself as the backbone of next-gen AI systems. The DGX Spark and DGX Station signal a push toward accessible high-performance AI, while Blackwell Ultra and Vera Rubin GPUs reinforce NVIDIA’s dominance in deep learning. Robotics investments, particularly in AI-driven automation, hint at a future where NVIDIA powers real-world autonomous systems. However, with increased competition from emerging AI players like DeepSeek, NVIDIA must continue innovating aggressively to maintain its market leadership.

At a glance

Gemini 2.0 Flash expands image generation: Google is opening its experimental native image generation to developers via Google AI Studio and the Gemini API.

Multimodal capabilities: The model can generate images alongside text, edit images through conversation, and leverage world knowledge for more realistic visuals.

Better text rendering: Google says Gemini 2.0 Flash renders readable text in images more accurately than competing models, making it useful for ads and social posts.

Developer access: Available now for experimentation, with Google seeking feedback to refine it before a full production release.

Our vision

Google's Gemini 2.0 Flash moves AI-generated imagery into interactive, multimodal experiences, enabling more intuitive content creation for storytelling, design, and AI assistants. If refined successfully, it could reshape digital media, creative workflows, and real-time AI interactions, giving Google a competitive edge in AI-generated visual content over OpenAI’s DALL·E and Stability AI’s models.

“POV: You're already late for work and you haven't even left home yet. You have no excuse. You snap a pic of today's fit and open Gemini 2.0 Flash Experimental.”

Riley Goodside (@goodside), Mar 14, 2025

At a glance

Stable Virtual Camera launched: Stability AI introduced a model that turns 2D images into immersive 3D-like videos with realistic depth and movement.

AI-powered virtual camera: Users can generate videos from up to 32 images with dynamic camera paths like “Spiral” and “Dolly Zoom.”

Research preview: Currently available for noncommercial use on Hugging Face, with some limitations in handling humans, animals, and complex textures.

Stability AI’s comeback efforts: Following financial struggles and leadership changes, Stability has launched multiple new AI models and added James Cameron to its board.
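A dolly zoom moves the camera toward or away from the subject while changing focal length so the subject stays the same size in frame. Under a simple pinhole model, an object of width W at distance d projects to f·W/d on the sensor, so holding f/d constant keeps it fixed. The Python sketch below generates such a path, plus a basic rising orbit as one plausible reading of a "spiral" path. It is a generic illustration under our own assumptions (pinhole camera, subject at the origin, hypothetical helpers `dolly_zoom_path`, `spiral_path`, and `projected_width`), not Stability AI's code or the trajectory format Stable Virtual Camera actually uses.

```python
import math
from typing import List, Tuple

# Hypothetical helpers for illustration only; not Stable Virtual Camera's API.

def dolly_zoom_path(d_start: float, d_end: float, f_start: float,
                    n: int) -> List[Tuple[float, float]]:
    """Return n (distance, focal_length) pairs for a dolly zoom.

    Under a pinhole model a subject of width W projects to f * W / d,
    so holding f / d constant keeps the subject's size fixed.
    """
    if n < 2:
        raise ValueError("need at least two frames")
    ratio = f_start / d_start  # the quantity a dolly zoom holds constant
    path = []
    for i in range(n):
        t = i / (n - 1)
        d = d_start + t * (d_end - d_start)  # camera slides linearly
        path.append((d, ratio * d))          # focal length tracks distance
    return path


def spiral_path(radius: float, height: float, turns: float,
                n: int) -> List[Tuple[float, float, float]]:
    """Return n (x, y, z) camera positions circling the origin while rising."""
    if n < 2:
        raise ValueError("need at least two frames")
    positions = []
    for i in range(n):
        t = i / (n - 1)
        angle = 2 * math.pi * turns * t
        positions.append((radius * math.cos(angle),
                          radius * math.sin(angle),
                          height * t))
    return positions


def projected_width(f: float, width: float, d: float) -> float:
    """Pinhole projection: sensor-plane width of an object at distance d."""
    return f * width / d


if __name__ == "__main__":
    # Distances in metres, focal lengths in millimetres, subject 1 m wide.
    for d, f in dolly_zoom_path(d_start=4.0, d_end=2.0, f_start=50.0, n=5):
        print(f"distance={d:.2f} m  focal={f:.2f} mm  "
              f"subject={projected_width(f, 1.0, d):.1f} mm on sensor")
```

With the sample values, the camera slides from 4 m to 2 m while the focal length drops from 50 mm to 25 mm, and the 1 m subject stays at 12.5 mm on the sensor in every frame.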

Our vision

Stable Virtual Camera signals a leap forward for AI-driven 3D content creation, making immersive scene generation more accessible. While it currently has quality limitations, future iterations could redefine filmmaking, virtual production, and metaverse applications. As AI-generated 3D content gains traction, Stability AI's success will depend on its ability to refine its models and commercialize its innovations in a competitive market.

At a glance

Canvas for interactive collaboration: A new real-time editing space for writing and coding within Gemini, allowing users to refine documents and code seamlessly with AI assistance.

Code generation and preview: Developers can prototype web apps, scripts, and interactive projects directly in Canvas, preview HTML/React, and iterate on designs without switching tools.

Audio Overview for files: Converts documents, slides, and research reports into podcast-style AI discussions, summarizing content dynamically for on-the-go learning.

Global rollout: Canvas and Audio Overview are rolling out to Gemini users and Gemini Advanced subscribers, with Audio Overview launching in English first and expanding to other languages soon.

Our vision

Gemini is shifting toward becoming an AI-powered workspace, blending content generation, live collaboration, and interactive coding into a single tool. Features like Canvas and Audio Overview position it as a multimodal AI assistant that goes beyond chat-based interactions, competing with Microsoft Copilot and Notion AI. As AI becomes a more proactive creative partner, expect deeper integration of voice, visuals, and code automation, transforming how we create, learn, and develop software.

Spatial Computing

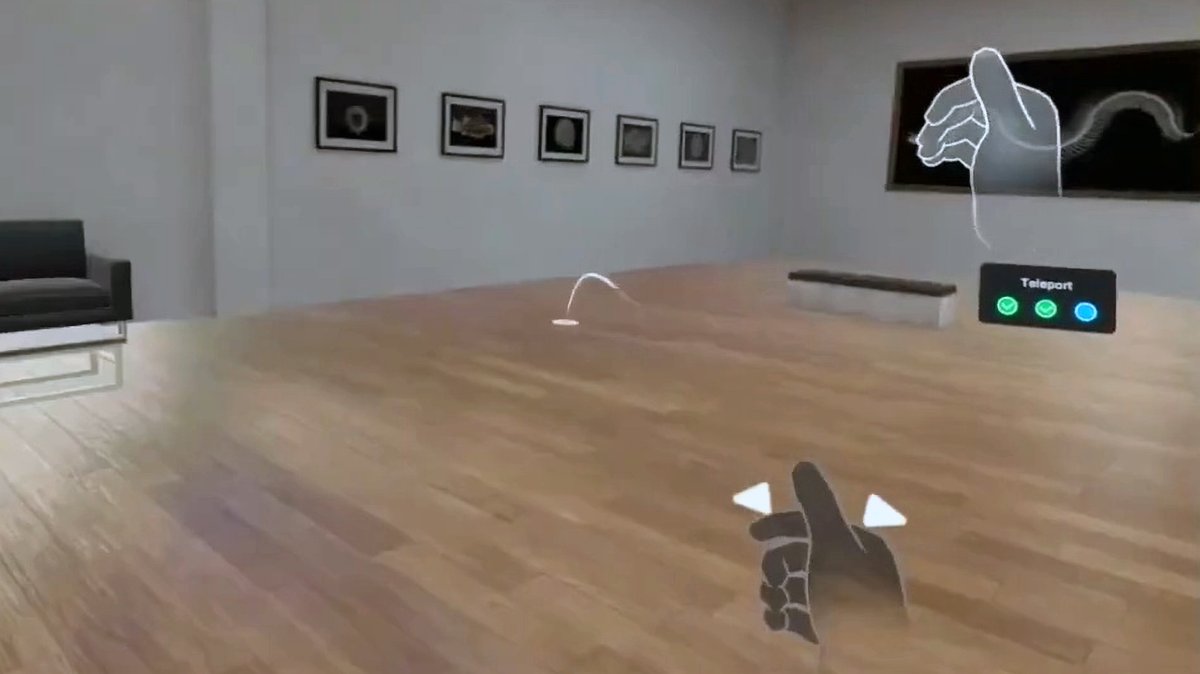

At a glance

Passthrough Camera API availability: Developers can now experiment with Quest’s passthrough camera API but cannot yet integrate it into store apps.

Supported devices: The feature is available for Quest 3 and Quest 3S as an experimental release.

Functionality: Apps can access forward-facing color cameras with metadata like lens intrinsics and headset pose for computer vision models, enabling use cases like QR code scanning, game board tracking, and enterprise applications.

Technical details: The API provides up to 1280×960 resolution at 30 FPS with 40 to 60 ms of latency; the latency makes it unsuitable for tracking fast-moving objects, and the resolution limits its ability to read small text.

Integration: Works via Android’s Camera2 API and OpenXR, making it compatible with future Android XR platforms, including Samsung’s standalone headset.
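The numbers above explain the limits. At 30 FPS a new frame arrives every 33.3 ms, so 40 to 60 ms of latency means the frame an app processes shows the world roughly one to two frames in the past; an object moving at 2 m/s (an assumed, illustrative speed) has already moved 8 to 12 cm. The lens intrinsics are what let a vision model map a 3D point, such as a detected QR code corner, to a pixel. The Python sketch below works through both with a standard pinhole model. The intrinsic values and the `Intrinsics` class are hypothetical placeholders, not Quest 3 calibration data, and the code does not use Meta's API or Android's Camera2 API.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

FRAME_RATE_HZ = 30.0  # upper bound quoted for the passthrough camera feed


def frame_interval_ms(fps: float) -> float:
    """Time between consecutive frames in milliseconds."""
    return 1000.0 / fps


def motion_during_latency_cm(speed_m_per_s: float, latency_ms: float) -> float:
    """How far an object travels (in cm) before the app sees the frame."""
    return speed_m_per_s * (latency_ms / 1000.0) * 100.0


@dataclass
class Intrinsics:
    """Hypothetical pinhole intrinsics: focal lengths and principal point, in pixels."""
    fx: float
    fy: float
    cx: float
    cy: float
    width: int = 1280
    height: int = 960

    def project(self, x: float, y: float, z: float) -> Optional[Tuple[float, float]]:
        """Project a camera-space point (metres, +z forward) to pixel coordinates.

        Returns None if the point is behind the camera or falls outside the image.
        """
        if z <= 0:
            return None
        u = self.fx * x / z + self.cx
        v = self.fy * y / z + self.cy
        if 0 <= u < self.width and 0 <= v < self.height:
            return (u, v)
        return None


if __name__ == "__main__":
    print(f"frame interval: {frame_interval_ms(FRAME_RATE_HZ):.1f} ms")
    for latency in (40.0, 60.0):
        print(f"{latency:.0f} ms latency: a 2 m/s object moves "
              f"{motion_during_latency_cm(2.0, latency):.0f} cm")
    # Placeholder intrinsics for a 1280x960 image, NOT real Quest calibration values.
    cam = Intrinsics(fx=640.0, fy=640.0, cx=640.0, cy=480.0)
    print(cam.project(0.1, 0.0, 1.0))  # a point 10 cm right of centre, 1 m ahead
```

Running it prints a 33.3 ms frame interval, 8 cm and 12 cm of motion for the two latency bounds, and pixel (704.0, 480.0) for the sample point.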

Our vision

Meta's passthrough camera API marks a major step in mixed reality development, unlocking more interactive and context-aware applications. While store app support is still pending, this API bridges the gap between digital and physical spaces, enabling dynamic AR overlays, AI-powered object recognition, and enhanced user interactions. As developers refine their implementations, this technology could lay the groundwork for next-gen MR experiences, particularly in enterprise, gaming, and AI-assisted environments. Given its cross-platform potential, it may also serve as a key building block for Google and Samsung’s upcoming XR ecosystem, which could encourage broader industry adoption.

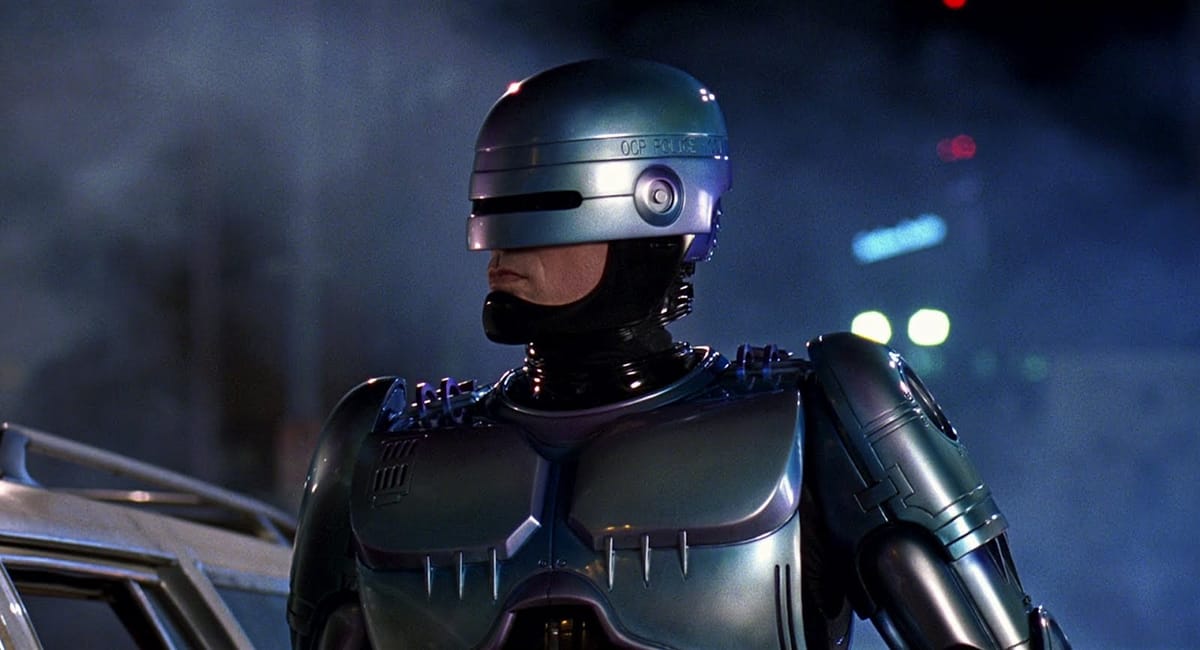

At a glance

RoboCop: Streets of Anarchy announced: A VR arcade shooter based on the classic sci-fi film is coming in late 2025, developed by Coffee Moth Games in partnership with Amazon-MGM.

Not coming to Quest 3 or PC: Initially listed for Quest 3 and PC, but developers clarified it's an arcade-exclusive title and updated the website accordingly.

First official RoboCop VR adaptation: While modders have experimented with VR versions of RoboCop games, this marks the first official VR game in the franchise.

Developer experience: Coffee Moth Games has prior VR experience, working on titles like Neon Overdrive (VR roguelike shooter) and Gummy Bear and Friends: Flushed Frenzy (arcade exclusive).

Our vision

RoboCop: Streets of Anarchy continues the trend of franchise-driven VR experiences, but its arcade exclusivity limits accessibility for most players. While arcade VR offers higher-fidelity, location-based experiences, it misses the growing home VR market led by Quest, PC VR, and PSVR2. If successful, this could encourage more arcade-exclusive IP-based VR games, though a home release may still be possible in the future.

At a glance

Thumb microgestures: Quest SDK v74 adds new gestures that let users swipe and tap their thumb along the side of their index finger for intuitive, controller-free interactions.

Passthrough camera API: Developers can now access the headset’s forward-facing color cameras, enabling custom computer vision applications.

Improved Audio To Expression: Updates to Meta’s AI-powered facial animation model improve expressivity, mouth movement accuracy, and the rendering of non-speech vocalizations.

Future AR applications: The microgesture system aligns with Meta’s sEMG neural wristband and upcoming HUD glasses, potentially making Quest a development platform for next-gen AR devices.

Our vision

Meta is advancing natural, hands-free interaction in spatial computing, reinforcing Quest’s role as a VR and AR development hub. Microgestures push controller-free input closer to mainstream adoption, while improved Audio To Expression enhances avatar realism for social VR. The passthrough camera API expands computer vision possibilities, supporting more sophisticated mixed reality experiences. These updates signal Meta’s broader strategy: refining intuitive, lightweight interactions for a future where wearables replace traditional interfaces.

Robotics

At a glance

NVIDIA’s Newton engine: A new physics engine co-developed with Disney Research and Google DeepMind to enhance robotic movement.

Disney’s AI-powered droids: Newton will power Star Wars-inspired BDX droids coming to Disney parks next year.

Open source release: NVIDIA plans to release an early version of Newton later in 2025.

More precise manipulation: Newton is designed to help robots handle complex objects such as food and fabric with greater precision.

DeepMind integration: Newton is compatible with MuJoCo, DeepMind’s physics engine, for advanced robotic simulations.

More NVIDIA AI news: NVIDIA also announced GR00T N1 for humanoid robots, next-gen AI chips, and personal AI computers.

Our vision

Newton marks a significant step forward in AI-driven robotics, enabling more natural movement and interaction in real-world settings. By enhancing Disney’s entertainment robots, this technology is setting the stage for more immersive theme park experiences and advancing the broader field of robotics. As an open-source tool, Newton could accelerate the development of AI-powered robotics in gaming, mixed reality, and virtual environments, making robotic interactions more lifelike and adaptable across industries.

Transportation

At a glance

Tesla receives permit: Granted a transportation charter permit (TCP) in California, allowing company-owned vehicles with employee drivers to provide prearranged rides.

Not a robotaxi permit: The TCP does not allow autonomous vehicle testing or deployment, and it differs from the transportation network company (TNC) permits held by ride-hailing services such as Uber and Lyft.

Employee transport first: Tesla will initially use the permit for transporting employees and must notify regulators before offering rides to the public.

No AV authorization: Tesla has not applied for or received permission to operate driverless vehicles in California and would need additional permits to do so.

Texas robotaxi plans: Tesla aims to launch a robotaxi service in Austin by June, using its Full Self-Driving software.

Our vision

Tesla's permit is a stepping stone toward its broader transportation ambitions, but the company is still far from its vision of an autonomous ride-hailing service. With California's regulations restrictive, Tesla is turning to Texas, where regulatory hurdles may be lower. The long-term play hinges on winning regulatory approval for driverless operations, which will determine Tesla’s ability to compete in the growing autonomous mobility market.

