Vision Quest

This Week in Tech 54

NVIDIA kicks off CES with a bang, MIT walks us through its top 10 breakthroughs, and agentic AI is making waves

Welcome to the cutting edge ⚔️

Read time: 6 min

Today’s Slate

CES is underway: check out the latest announcements and product reveals

NVIDIA’s keynote puts their progress on display

MIT walks us through their top 10 breakthrough technologies for 2025

Agentic AI is about to change how work gets done

You can use an Apple Vision Pro to help train humanoid robots

Computer chips designed by AI are weird, yet they outperform human-designed chips

The. Future. Is. Here.

CES 2025

We gotta be honest: there’s too much going on at CES to cover in this newsletter. We’re listing some of the key updates so far, but there are so many announcements and reveals in Vegas this week. We’ll have to get on the front lines next year and provide a special in-person report.

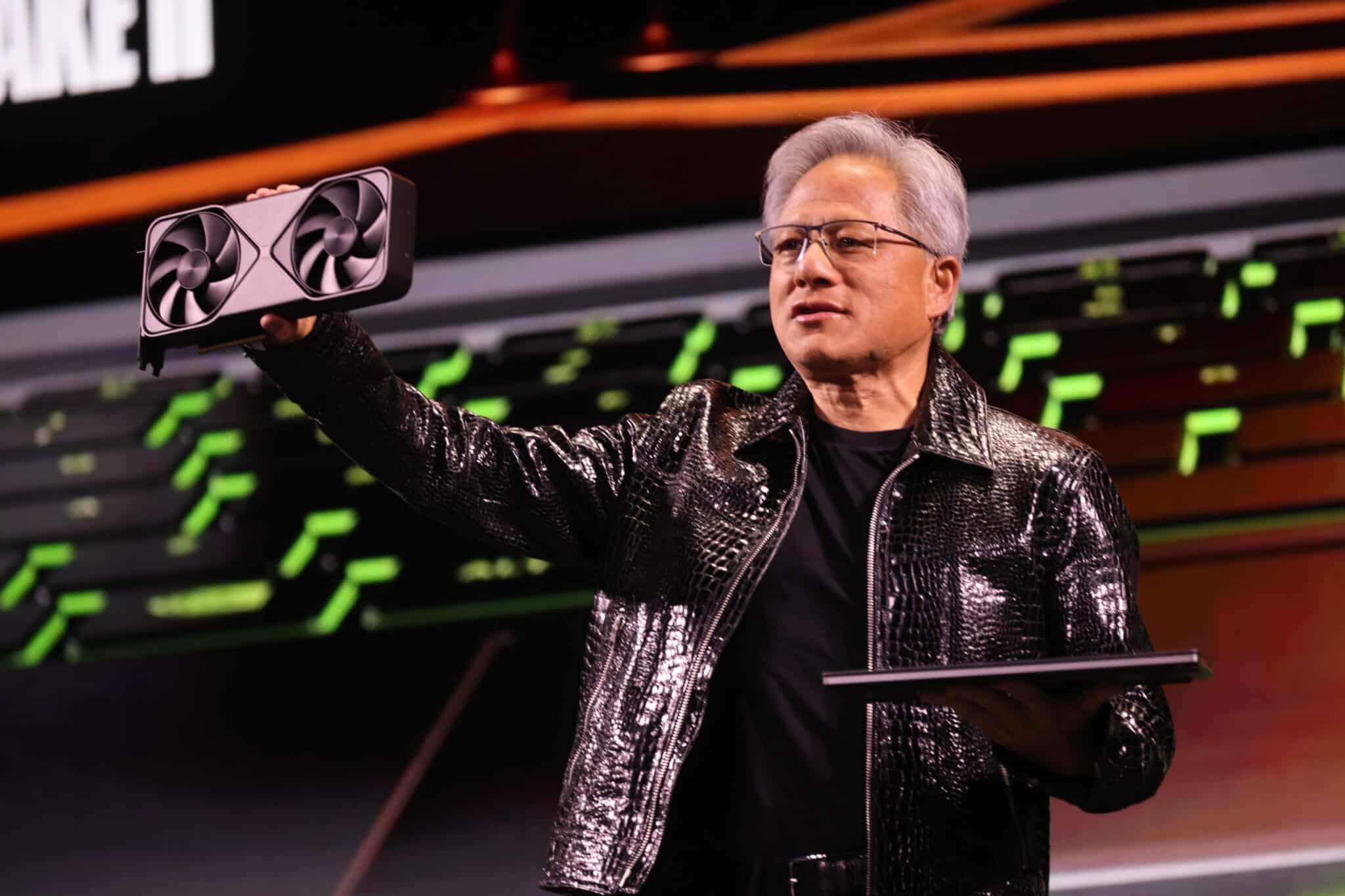

The highlight so far, in our opinion, has been the NVIDIA keynote.

Additional CES 2025 Announcements

Next Week

We’ll wrap up CES in next week’s edition, which will most likely be dedicated to a CES recap plus any other important updates from around the world of tech.

MIT Innovation Issue

Small Language Models: Companies like OpenAI and Google are optimizing smaller AI models for focused tasks, balancing efficiency and sustainability.

Generative AI Search: Tools like Google’s Gemini-powered AI Overviews are reshaping internet search by offering concise, context-aware responses.

Vera C. Rubin Observatory: This Chile-based telescope aims to map dark matter and dark energy with its 3.2-gigapixel camera, offering groundbreaking insights into the universe.

Long-acting HIV Medications: Innovations like Gilead’s lenacapavir promise to transform HIV prevention.

Cleaner Jet Fuel: Advances in sustainable aviation fuels aim to reduce carbon emissions from air travel.

Fast-learning Robots: AI and robotics converge for more adaptable, efficient industrial and consumer robots.

Stem-cell Therapies: Breakthroughs in regenerative medicine promise to revolutionize treatments for chronic diseases.

Green Steel: Initiatives like Sweden’s fossil-free steel plant showcase industrial sustainability.

Robotaxis: Autonomous vehicles, led by companies like Waymo, are finally entering the mainstream.

Cattle Methane Mitigation: Innovative feed additives reduce emissions, aiding climate efforts.

Our vision

AI and related technologies will continue integrating into daily life, prioritizing efficiency, accessibility and sustainability. Small, task-specific AI models will dominate enterprise solutions, while breakthroughs in generative AI and robotics will enhance search, logistics and personal assistants. Waymo’s AI navigation is making strides, but I did just see a video of a rider going in circles in a parking lot in their Waymo, saying they should’ve just stuck with Uber. We’ll also have to wait and see whether Tesla can deliver the autonomous vehicles it showed off at its latest event.

Artificial Intelligence

At a glance

Level 1—Reactive Agents: Respond to predefined inputs without learning or memory, useful for automating repetitive tasks like customer support chatbots.

Level 2—Task-Specialized Agents: Excel in specific domains, such as fraud detection or route optimization, often outperforming humans in narrowly defined tasks.

Level 3—Context-Aware Agents: Adapt to ambiguity and complexity, using real-time and historical data for dynamic decision-making in fields like medicine, finance, and urban planning.

Level 4—Socially Savvy Agents: Incorporate emotional intelligence to interpret human emotions and intent, enabling empathetic customer interactions and nuanced negotiations.

Level 5—Self-Reflective Agents: Hypothetical agents capable of introspection and self-improvement, potentially revolutionizing fields like manufacturing and marketing through autonomous strategy evolution.

Level 6—Generalized Intelligence Agents: Aiming for adaptability across domains, akin to human intelligence, with the ability to unify insights from multiple business functions.

Level 7—Superintelligent Agents: Theoretical systems surpassing human intelligence, capable of solving global challenges, optimizing complex systems, and redefining industries.
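To make the gap between Level 1 and Level 3 concrete, here is a minimal Python sketch under loudly stated assumptions: the keyword table, the replies and the "escalate after two repeats" rule are all hypothetical, and a real context-aware agent would draw on far richer real-time and historical data than a simple counter. The reactive agent answers the same way every time, while the context-aware one notices a repeated question and changes course.

```python
from collections import Counter

# Hypothetical keyword -> canned reply table for a support bot.
RULES = {
    "refund": "Refunds are processed within 5 business days.",
    "hours": "We're open 9am to 5pm, Monday to Friday.",
}
FALLBACK = "Sorry, I didn't understand that."

def match_topic(message):
    """Return the first rule keyword found in the message, or None."""
    text = message.lower()
    for keyword in RULES:
        if keyword in text:
            return keyword
    return None

class ReactiveAgent:
    """Level 1: the same input always gets the same reply; nothing is remembered."""
    def respond(self, message):
        topic = match_topic(message)
        return RULES[topic] if topic else FALLBACK

class ContextAwareAgent:
    """Toy nod to Level 3: tracks conversation history and adapts its decision."""
    def __init__(self, escalate_after=2):
        self.seen = Counter()  # topic -> times raised in this conversation
        self.escalate_after = escalate_after

    def respond(self, message):
        topic = match_topic(message)
        if topic is None:
            return FALLBACK
        self.seen[topic] += 1
        # A repeated question suggests the canned answer isn't helping, so hand off.
        if self.seen[topic] > self.escalate_after:
            return f"Connecting you with a human about your {topic} question."
        return RULES[topic]

reactive, contextual = ReactiveAgent(), ContextAwareAgent()
for _ in range(3):
    print(reactive.respond("Where is my refund?"), "|", contextual.respond("Where is my refund?"))
```

On the third identical question the reactive agent repeats itself, while the context-aware agent uses what it has already seen to escalate.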

Our vision

The evolution of AI agents will likely accelerate, with context-aware and socially savvy agents becoming mainstream across industries like healthcare, finance, and customer service. Companies will also edge closer to self-reflective agents, leveraging advances in generative AI and reinforcement learning for autonomous decision-making. The broader landscape will be marked by a race to balance ethical AI development with competitive pressures, as organizations explore transformative applications for AI agents while preparing for the eventual arrival of generalized intelligence.

At a glance

Purpose: G-Assist is an AI assistant for GeForce RTX users that simplifies PC optimization and performance tuning.

Features: Real-time diagnostics, system optimization, peripheral control, and exportable performance metrics.

AI Model: Powered by an efficient Llama-based small language model (SLM) that runs locally on RTX GPUs, so it works offline.

Community Integration: Extensible via GitHub plugins and adopted by partners like MSI, Logitech, and HP.

Launch: February 2025 via the NVIDIA App for GeForce RTX users.

Our vision

NVIDIA’s launch of G-Assist isn’t just a new feature—it’s a statement about their expanding role as the backbone of AI-powered ecosystems. Known for powering everything from gaming to autonomous vehicles and AI research, NVIDIA is uniquely positioned to integrate AI assistants directly into hardware, bridging cutting-edge computation with everyday usability. Over the next few years, G-Assist could evolve into a cornerstone for personal and professional productivity, demonstrating how deeply AI can enhance human-computer interaction. With NVIDIA’s ecosystem of GPUs, software, and partnerships, they’re building not just tools, but an infrastructure for the AI-driven future.

At a glance

AI-Powered Chip Design: Researchers at Princeton University and IIT Madras have developed an AI system capable of designing wireless chips in hours, compared to the weeks traditionally required by human engineers.

Strange but Effective Designs: The AI generates unconventional circuit patterns that deliver improved performance in speed, energy efficiency, and frequency range, surpassing traditional human designs.

Revolutionizing Complexity: The AI tackles the nearly infinite design possibilities of wireless chips by treating them as unified artifacts rather than assembling components one at a time.

Human Oversight Needed: While the AI accelerates development, human engineers remain essential to refine and correct errors or "hallucinated" elements in the designs.

Future Potential: The AI has already created broadband amplifiers and will next aim to design entire wireless chips, paving the way for more advanced systems in communication, radar, and autonomous technologies.
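Why does treating the chip as one unified artifact matter? Here is a toy Python illustration, not the Princeton/IIT Madras method: two hypothetical "components" each take one of six settings, and a made-up performance score couples them so the best choice for one depends on the other. Tuning them one at a time gets stuck, while scoring the whole design together finds the optimum. Real wireless chips have far too many possibilities for the brute-force search used here, which is why the researchers turned to AI.

```python
from itertools import product

SETTINGS = range(6)  # hypothetical: each component has six discrete settings

def performance(a, b):
    """Made-up score where the best a depends on b, i.e. the components interact."""
    return -(a - b) ** 2 - (b - 3) ** 2

def one_at_a_time(default_b=0):
    """Component-by-component: tune a with b parked at a default, then tune b with a frozen."""
    a = max(SETTINGS, key=lambda x: performance(x, default_b))
    b = max(SETTINGS, key=lambda y: performance(a, y))
    return a, b, performance(a, b)

def whole_design():
    """Unified artifact: score every (a, b) combination together and keep the best."""
    a, b = max(product(SETTINGS, SETTINGS), key=lambda ab: performance(*ab))
    return a, b, performance(a, b)

print(one_at_a_time())  # (0, 1, -5)
print(whole_design())   # (3, 3, 0)
```

The sequential route settles on a design scoring -5 because the first component was locked in before the second was considered; searching the design as a whole reaches the best score of 0.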

Our vision

The integration of AI into wireless chip design is a game-changer, merging human creativity with machine efficiency. By handling the overwhelming complexity of chip configurations, this technology unlocks possibilities that were previously out of reach. As AI-generated designs push the boundaries of performance, they mirror the leap NVIDIA has made with tools like G-Assist and GR00T, where AI transforms the user experience.

In the next few years, expect AI-assisted designs to accelerate innovation across industries, making futuristic technologies like ultra-fast 6G networks and intelligent autonomous systems not just feasible but mainstream. It’s a collaborative future where AI redefines what’s possible while humans steer the creative vision.

Spatial Computing

At a glance

NVIDIA and Humanoid Robotics: NVIDIA is leveraging its GR00T platform, unveiled in 2024, to propel humanoid robotics forward, with key partners like Boston Dynamics, Agility Robotics, and Sanctuary AI.

Blueprint Modality: GR00T’s new feature, Blueprint, enables robots to learn tasks through imitation learning, where robots mimic human actions for effective skill acquisition, especially in environments like factories and warehouses.

Apple Vision Pro Integration: NVIDIA’s partnership with Apple allows Blueprint to use the Vision Pro headset to capture actions as digital twins. These digital twins are then used in simulation, enabling robots to execute tasks repeatedly and refine their performance.

Teleoperation: Remote teaching via teleoperation digitizes human actions, making it easier to program robots to perform complex tasks efficiently.
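Imitation learning can be as simple as behavior cloning: record what a human operator did in each situation, then fit a policy that reproduces it. The Python sketch below is a one-dimensional toy under stated assumptions, not GR00T's Blueprint API: the "teleoperation log" is synthetic, the operator's hidden rule (action = 2 * state + 1) is made up, and the policy is a straight line fitted by least squares rather than a neural network. The final check stands in for replaying the learned policy in simulation.

```python
def expert_action(state):
    """Hypothetical human operator; the learner never sees this rule directly."""
    return 2.0 * state + 1.0

def collect_demonstrations(states):
    """Synthetic stand-in for a teleoperation log of (state, action) pairs."""
    return [(s, expert_action(s)) for s in states]

def fit_linear_policy(demos):
    """Behavior cloning, simplest form: least-squares fit of action = w * state + b."""
    n = len(demos)
    mean_s = sum(s for s, _ in demos) / n
    mean_a = sum(a for _, a in demos) / n
    cov = sum((s - mean_s) * (a - mean_a) for s, a in demos)
    var = sum((s - mean_s) ** 2 for s, _ in demos)
    w = cov / var  # requires at least two distinct demonstration states
    b = mean_a - w * mean_s
    return lambda state: w * state + b

def imitation_error(policy, test_states):
    """Mean absolute gap between learned and expert actions over (simulated) trials."""
    return sum(abs(policy(s) - expert_action(s)) for s in test_states) / len(test_states)

demos = collect_demonstrations([0.0, 1.0, 2.0, 3.0, 4.0])
policy = fit_linear_policy(demos)
print(policy(2.5))                                 # 6.0
print(imitation_error(policy, [0.5, 7.0, 12.25]))  # 0.0
```

Because the toy operator follows a perfectly linear rule, the cloned policy matches it exactly, even on states it never saw; real robot demonstrations are noisy and high-dimensional, which is where repeated simulated practice earns its keep.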

Our vision

NVIDIA’s integration of Blueprint with Apple Vision Pro underscores its leadership in bridging cutting-edge AI with robotics. By combining imitation learning with the precision of digital twins, NVIDIA is democratizing robotic training, bringing humanoids closer to mainstream deployment. This collaboration is a glimpse into the future where AI-driven robots become essential extensions of human labor, seamlessly adapting to dynamic real-world tasks. Given NVIDIA’s unmatched influence in AI and GPUs, it’s clear they aim to be the foundational layer of this robotic revolution.


There’s a reason 400,000 professionals read this daily.

Join The AI Report, trusted by 400,000+ professionals at Google, Microsoft, and OpenAI. Get daily insights, tools, and strategies to master practical AI skills that drive results.
