This Week in Tech 41

Big moves in AI as James Cameron joins the board of Stability AI and Meta releases its new text-to-video model, Movie Gen

Welcome to the cutting edge ⚔️

Read time: 9 min

Today’s Slate

Meta releases Movie Gen, its text-to-video competitor to OpenAI's Sora

OpenAI releases Canvas, an AI-enhanced writing and coding environment in the vein of Cursor and Repl.it

AI is watching your bumper stickers to better understand you

The race toward a metaverse powered by smart glasses is reigniting as Samsung and Google team up

Taking a look at Tesla’s upcoming 10/10 Robotaxi event

Artificial Intelligence

At a Glance:

Meta's Movie Gen generates high-definition videos, including sound, from text prompts or edits existing footage with AI-powered tools.

Users can edit video styles, add elements, and manipulate transitions through text prompts.

Competitors like OpenAI's Sora and Google's offerings also aim to innovate in AI-generated video content.

Movie Gen integrates audio that aligns with visual elements, including background music and sound effects.

Vision:

Movie Gen positions Meta to compete aggressively in the burgeoning AI video generation space, enhancing creative workflows. Compared to Sora, Meta's tool combines video, audio, and text-based editing, paving the way for AI-driven content creation. This technology could revolutionize filmmaking, marketing, and content creation by reducing production times while empowering users with advanced creative control across multiple media formats.

At a Glance:

Our Vision:

OpenAI’s Canvas represents a major step toward making generative AI more productive, offering an intuitive, collaborative workspace for writing and coding tasks. Expect it to compete with other tools that offer AI-assisted workflows, especially in professional and educational settings.

At a Glance

License Plate Recognition (LPR) technology, designed to capture vehicle plates, now collects images of political signs, bumper stickers, and more.

This data can be searched for non-license plate-related content like political affiliations or personal beliefs.

Over 15 billion vehicle images are collected by DRN Data, and concerns about privacy and potential misuse of this vast database are rising.

Our Vision

LPR technology’s evolution reveals an urgent need for privacy regulations, as its ability to capture personal information goes beyond traffic enforcement. If unregulated, this growing surveillance could lead to privacy breaches and misuse, making strong policy action critical.

At a Glance:

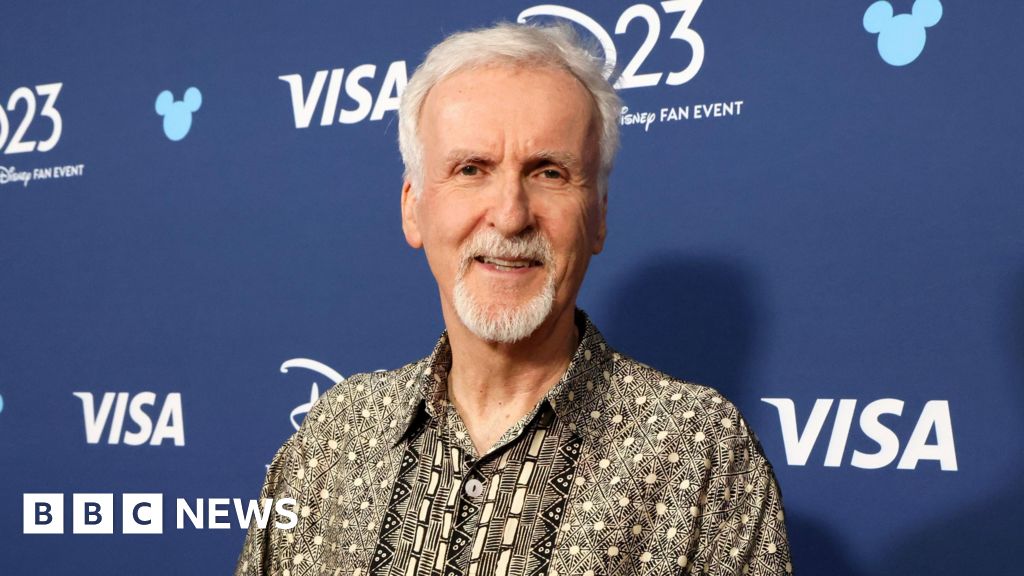

James Cameron, director of The Terminator, has joined Stability AI's board, focusing on special effects using AI.

Stability AI developed Stable Diffusion and is now creating Stable Video Diffusion for video generation.

Cameron aims to push the boundaries of CGI through generative AI.

Competitors include OpenAI’s Sora and MiniMax, and the company also faces a copyright lawsuit from Getty Images over AI training data.

Our Vision:

James Cameron’s collaboration with Stability AI highlights a shift toward using AI for storytelling and creative tools, bridging traditional filmmaking with cutting-edge generative AI for visual effects. Cameron was also critical in developing the novel technology that enabled Avatar to deliver such high-quality, immersive computer-generated visuals.

His track record of developing and then using cutting-edge technology is a positive sign for his involvement in how AI will shape content creation. This partnership could redefine how movies are made, enhancing visual creativity while facing challenges related to copyright and industry skepticism.

Spatial Computing

At a Glance:

Submerged is Apple’s first scripted, immersive film made for the Vision Pro headset.

Directed by Edward Berger and set during World War II, it follows sailors surviving a torpedo attack.

The film is designed for a 3D experience, making viewers feel like they are in the scene.

Our Vision:

The release of Submerged signals a significant step forward in immersive entertainment. By integrating 3D technology and scripted storytelling, Apple’s Vision Pro introduces new ways for audiences to experience cinema, marking an important evolution for virtual reality and enhancing the future of storytelling in digital spaces.

At a Glance

Target Product: The glasses will be simpler smart glasses rather than full AR, potentially branded as "Samsung Glasses."

Competition: Samsung and Google face challenges without Meta’s partner, EssilorLuxottica, which dominates the eyewear market.

Extended Partnership: Meta and EssilorLuxottica recently extended their Ray-Ban partnership into the 2030s, securing a competitive advantage.

Our Vision

Samsung and Google’s partnership signals a significant move toward wearable tech’s mainstream adoption. However, Meta’s extended partnership with EssilorLuxottica gives it a solid foothold in the market, which could limit the success of competitors. The introduction of more competitors can accelerate innovation, driving progress in consumer-grade AR and smart glasses for everyday use.

At a Glance

Epic’s Financial Recovery: After layoffs in 2023, Epic Games is now "financially sound," according to CEO Tim Sweeney.

Unreal Engine Evolution: The company plans to merge Unreal Engine and Fortnite Editor into Unreal Engine 6, offering ease of use for developers and creators.

Metaverse Plans: Epic is collaborating with Disney to create a persistent universe. It also aims to make assets interoperable across platforms like Minecraft and Roblox.

Legal Challenges: Epic continues its legal battles with Apple, Google, and now Samsung over mobile app store policies.

Our Vision

Epic’s long-term focus on metaverse tools and immersive technology is setting the stage for a more interoperable future in gaming and beyond. With projects like Unreal Engine 6 and partnerships with major brands like Disney, the company is pushing toward a future where digital worlds are interconnected.

However, legal battles and shifting metaverse interest highlight the challenges ahead. This is a crucial step for the evolution of both gaming and virtual reality, paving the way for more seamless and integrated experiences across platforms.

At a Glance:

The latest Philips Hue app update adds an augmented reality (AR) preview feature for iPhones and iPads with lidar sensors.

It allows users to visualize how Hue smart lamps would light up their space.

Other features include a "do not disturb" option for motion sensors and enhanced programming for Hue switches.

Our Vision:

Philips’ embrace of AR previews could streamline smart home planning, offering a more immersive, hands-on approach for consumers. It signals a future where users can personalize their lighting and automation with precise real-time insights.

This is a key step toward overlaying a digital twin on a physical environment. Monitoring a factory or diagnosing an issue in your office through AR is a feasible and efficient future.

Transportation

At a Glance

Tesla's Robotaxi Day on October 10, 2024, is expected to be a pivotal moment for autonomous driving.

The event could reveal Tesla's fully self-driving $25K Cybercar and advancements in autonomous vehicle technology.

With over 3 million Tesla cars equipped with Full Self-Driving (FSD), this move could disrupt the ride-hailing industry, urban planning, and global transportation infrastructure.

Our Vision

Tesla's Robotaxi program could reinvent the transportation industry, reducing costs, traffic fatalities, and even the need for personal car ownership. This shift highlights the future of urban mobility, where autonomy will redefine transportation and reshape cities.

At a Glance:

GM is developing a new Level 3 (L3) autonomous driving system, allowing for hands-off, eyes-off driving on highways.

The system will expand on GM's current Super Cruise, which is a Level 2 hands-off, eyes-on system.

GM aims to offer the system on 750,000 miles of U.S. and Canadian roads by 2025.

Our Vision:

GM's L3 system represents a significant step in autonomous driving, positioning the company ahead of most automakers and advancing the industry towards fully autonomous highways, with safety and convenience in focus.

For context, the SAE driving automation scale ranges from Level 0 to Level 5:

Level 0: No automation; the driver controls everything.

Level 1: Driver assistance, such as cruise control or lane keeping.

Level 2: Partial automation; car can handle steering and acceleration, but driver must stay alert (e.g., Tesla Autopilot).

Level 3: Conditional automation; car can drive itself under certain conditions, but driver must take over when the system requests it.

Level 4: High automation; car can handle all driving tasks without driver intervention, but only within specific conditions.

Level 5: Full automation; no driver input required.
